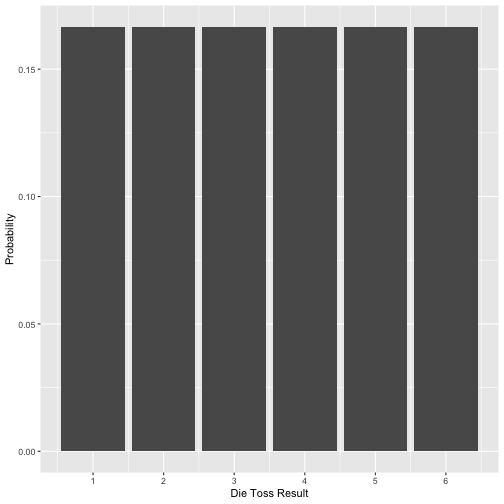

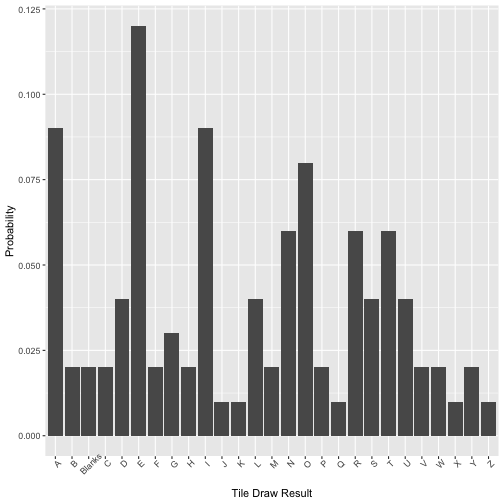

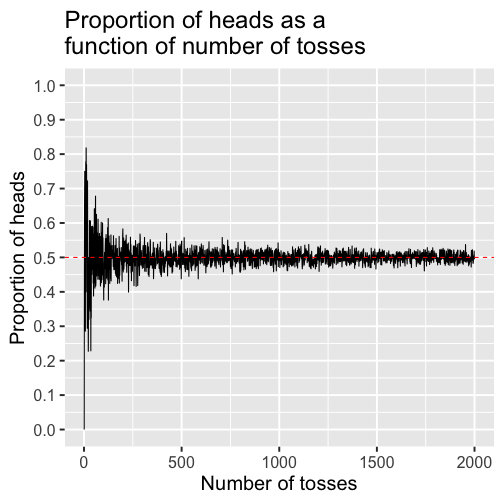

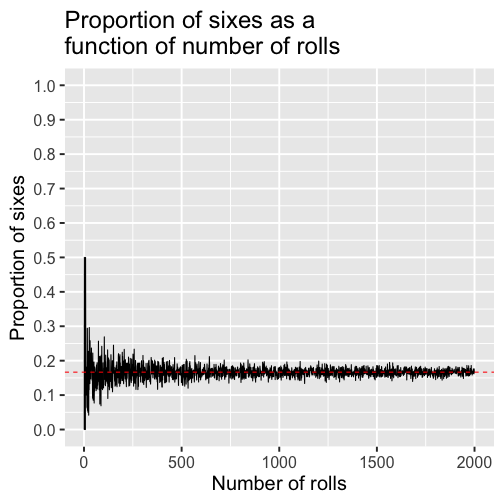

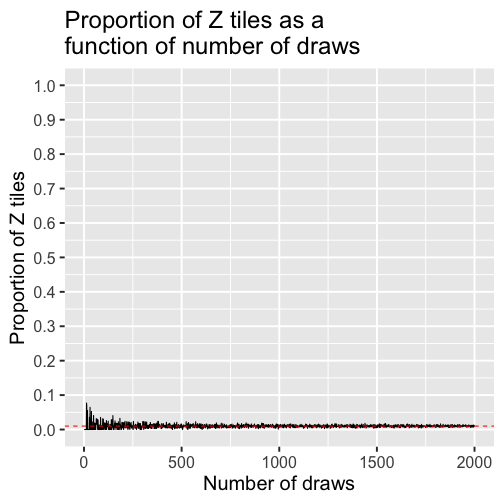

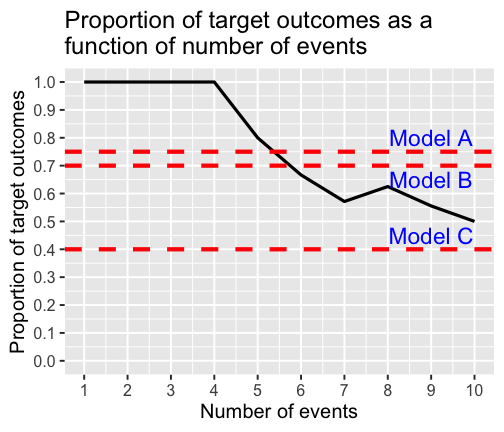

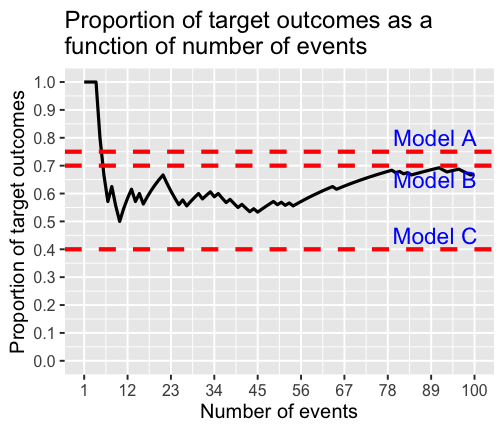

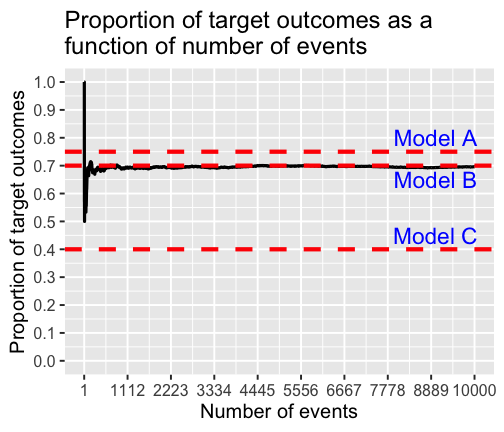

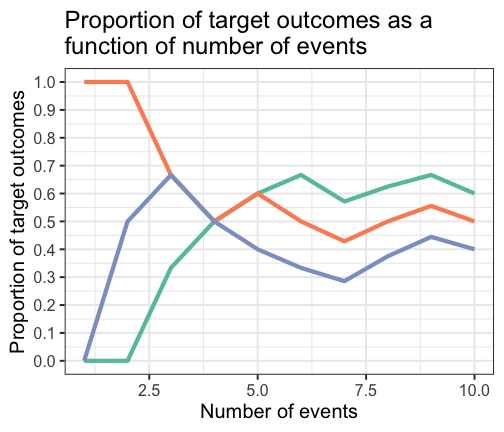

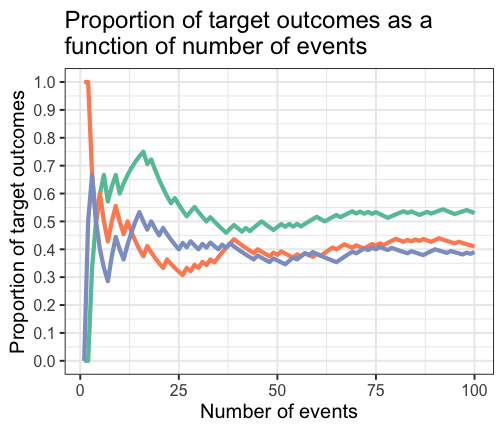

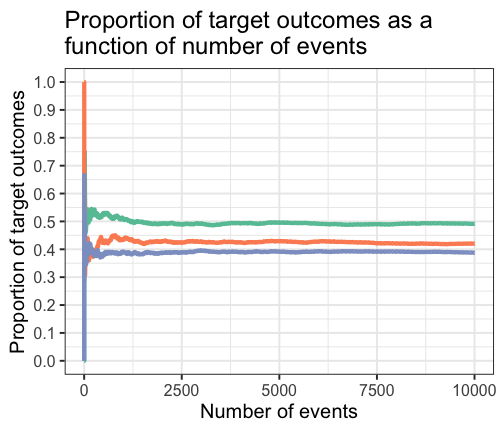

class: center, middle, inverse, title-slide # Probability and Probability Distributions --- ## Annoucements - Can I share your feedback with Sanjay? - Lab breakout groups --- In research, we wish to make inferences about the **unknown** state of the world based on **known** data we have in hand. We often accomplish this by determining how rare or unusual our data are under alternative models. On that basis we might reject some models as implausible. We might even be able to state how confident we are in that conclusion. This is **statistical inference**. The language of how rare or unusual (or common or typical) the data might be is the language of **probability**. --- ## Some terminology An **elementary event** is a member of the mutually exclusive and exhaustive outcomes that can happen when we make an observation. - For a coin toss, there are two elementary events. - For rolling a die, there are six elementary events. - For drawing tiles out of a Scrabble bag, there are 27 elementary events. -- Define simple experiment -- well defined act or process leading to simple outcome The **sample space** is the set of all possible elementary events. ??? Define mutually exclusive Define exhaustive --- *Absent additional information*, we assume that what happens from outcome to outcome, as we observe events in the sample space, is *random*. For that reason, the variable we define to represent the outcome of events in a sample space is called a **random variable**. ??? Random = we do not have enough information to know what will happen --- ### More terminology A **non-elementary event** is composed of two or more elementary events. - For rolling a die, even numbers are a non-elementary event composed of tosses resulting in 2, 4, or 6. - For drawing tiles out of a Scrabble bag, vowels are non-elementary events composed of drawing the tiles A, E, I, O, and U. -- The sample space can be divided into non-elementary events in multiple ways. - Scrabble tiles can be categorized as vowels and consonants (or both), but also according to their point values. --- ### More terminology Each elementary event (and non-elementary event) has a **probability** and the sum of these probabilities is 1. - This Law of Total Probability is another way of saying that the sample space is exhaustive—all possible events are included and the probability that one of them will occur for a given observation is 1. -- The display of the elementary events and their probabilities is called a **probability distribution**. - For coin tosses and die rolls, this distribution is discrete and uniform. - For Scrabble tile draws, this distribution is discrete and non-uniform. - Other distributions we will encounter are continuous. ??? Write on board: `$$P(A)$$` and describe --- class: center <!-- --> ??? Probability can be determined as: `$$\LARGE p(A) = \frac{n(A)}{N}$$` but only if the events are equally probable --- class: center <!-- --> --- Probability distributions have **parameters** that define the nature of the distribution. - These may be as simple as a single probability (die roll outcomes) or include additional information to describe, for example, variability (e.g., normal distribution). Once we know the parameters, we know everything we need to construct the sample space and the probability distribution. - We know what to expect in the data as we encounter outcomes in the sample space. --- ## Rules of probabilities `$$\Large \text{not A} = P(\neg A) = 1-P(A)$$` `$$\Large \text{A or B} = P(A \cup B) = P(A) + P(B) - P(A \cap B)$$` `$$\Large \text{A and B }= P (A \cap B) = P(A|B)P(B)$$` -- If the outcomes are independent (a common assumption), then: `$$\Large \text{A or B} = P(A \cup B) = P(A) + P(B)$$` `$$\Large \text{A and B }= P (A \cap B) = P(A)P(B)$$` ??? `\(\Large \neg\)` is the complement of A Independent means the the information that one event has occured does not affect the probability of the other event. --- ### Independence example Simple experiment: draw a card from a standard 52 card deck. - A is drawing a red card. - B is drawing a king. - Are A and B independent? -- Another example: - A is drawing a red card. - B is drawing a heart. - Are A and B independent? ??? Ask: what are the elementary events in this experiment? Ask: what are the non-elementary events? --- There are two fundamental ways to think about probability: the frequentist view and the Bayesian view. -- <p> </p> The more common **frequentist** view is sometimes called the "in the long run" view. It defines probability as what is expected to happen in the long run, if the event in question (e.g., tossing a coin, rolling a die) is repeated over and over. <p> </p> We know that a fair coin will come up heads 50% of the time: `\(P(H) = .5\)`. <p> </p> - A coin flipped a couple of times might come up heads both times, but a head flipped 1000 times would not likely come up heads 1000 times. *In the long run, the proportion of heads will converge on the expected probability.* --- ## Sara's bag 'o treats --- This "long run" view of probability means that in "short run" the outcomes will not behave as expected—the outcomes will show variability around the expected probability outcome. We can simulate what happens for a probability model in the short run. In many cases this behavior can be known mathematically. <p> </p> The utility here is that we know what the truth is and can see how closely we approximate it in the long run, but also see what happens in realistic short run circumstances: - Tossing a coin: proportion of heads, P(H) = .5 - Rolling a die: proportion of sixes, P(6) = .1666 - Selecting a Scrabble tile: proportion of Zs, P(Z)=.01 --- Simulation here will confirm what we know must be true given the defined model, but will also give a sense of how far off the mark short-run results can be. This is valuable because we never have an infinite series of events; rarely do we have very long ones. We live in a short-run world and need to know how that affects inferences that we want to make. ```r set.seed(101719) nsim = 2000 X = matrix(NA, ncol = 4, nrow = nsim) for(S in 1:nsim){ X[S,1] = S X[S,2] = rbinom(n = 1, size = S, prob = .5)/S X[S,3] = rbinom(n = 1, size = S, prob = 1/6)/S X[S,4] = rbinom(n = 1, size = S, prob = .01)/S } X = as.data.frame(X); names(X) = c("num", "coin", "die", "scrabble") ``` --- class: center ```r X %>% ggplot(aes(x = num, y = coin)) + geom_line() + geom_hline(aes(yintercept = .5), color = "red", linetype = 2) + scale_x_continuous("Number of tosses") + scale_y_continuous("Proportion of heads", breaks = seq(0, 1, .1), limits = c(0,1)) + ggtitle("Proportion of heads as a\nfunction of number of tosses")+ theme_gray(base_size = 20) ``` <!-- --> ??? When small samples of coin tosses are used, the proportion of heads can be off the mark by a fair amount. Knowing this is important when we flip the process around and ask, "how reasonable is a model that assumes P = .5, given what I have found in my data?" --- class: center ```r X %>% ggplot(aes(x = num, y = die)) + geom_line() + geom_hline(aes(yintercept = 1/6), color = "red", linetype = 2) + scale_x_continuous("Number of rolls") + scale_y_continuous("Proportion of sixes", breaks = seq(0, 1, .1), limits = c(0,1)) + ggtitle("Proportion of sixes as a\nfunction of number of rolls")+ theme_gray(base_size = 20) ``` <!-- --> ??? Note that the expected probability is lower for this event. It converges on the expected value faster. --- class: center ```r X %>% ggplot(aes(x = num, y = scrabble)) + geom_line() + geom_hline(aes(yintercept = .01), color = "red", linetype = 2) + scale_x_continuous("Number of draws") + scale_y_continuous("Proportion of Z tiles", breaks = seq(0, 1, .1), limits = c(0,1)) + ggtitle("Proportion of Z tiles as a\nfunction of number of draws") + theme_gray(base_size = 20) ``` <!-- --> ??? And even faster here. All of these events are binary. The behavior we see in the outcomes (expected value and variability) is related to the expected value. The binomial distribution will formally define this behavior. --- The frequentist view is popular because it is objective. The events in question are observable and the definition of probability (proportion of an event in the long run) is calculated in the same way by everyone. <p> </p> On the other hand, in real life, "the long run" may not have a simple or realistic meaning: <p> </p> "The probability that Sara's dog is on the bed behind her is .8, or 80%." <p> </p> At one level, we all have an intuitive feel for what this means and understand it to tell us the dog is likely in view. --- But, in the strict sense, that statement doesn’t have a sensible meaning in the language of a frequentist view. <p> </p> The dog either is or is not on the bed (there are only two outcomes in the sample space); it makes no sense to say that the dog is on the bed 80% right now and the event is not repeatable (the dog on the bed right now) in the strict sense, so the “long run” doesn’t seem to apply. <p> </p> Instead, we have to say something like, on days like today (which can be repeated), the probability that the dog is on the bed is .8. <p> </p> Probability and statistics involve many such convenient fictions. What are the implications? ??? They allow us to get on with the job of forecasting from models to data or making inferences when going from data to models. --- The link between probability and statistics is clear when we make the move to inference—the major task in science. <p> </p> In probability, the model (the probability distribution, the generating function) is known and informs us what will be true about the data. <p> </p> In statistical inference, the model is not known and we let the data inform us about the plausibility of different models that might be true. <p> </p> We have to make assumptions about those models in order to sensibly answer the inference question. --- If this coin is fair (proposed model), I expect the proportion of heads to be .5 in the long run. <p> </p> After a long run (data collection), I find the proportion of heads to be .75. I reject the "fair coin model." I could be wrong but will try to limit the mistakes. <p> </p> This is statistical inference. It relies upon assuming some generating process for the events (a model), typically defined by a theoretical probability distribution (here the binomial). That framework allows us know the likelihood that we are wrong in our inference, especially in the short run. <p> </p> Probability and probability distributions provide a frame of reference for making inferences. --- ## Example: Which model? Three competing models are available to explain the occurrence of a target event. - Model A: `\(p(E) = .70\)` - Model B: `\(p(E) = .75\)` - Model C: `\(p(E) = .40\)` I assume that this target event follows a binomial probability distribution. If that model is correct, at what point can I distinguish the models and declare a winner? --- class: center <!-- --> Three competing models are available to explain the occurrence of a target event. I assume that this target event follows a binomial probability distribution. If that model is correct, at what point can I distinguish the models and declare a winner? --- class: center <!-- --> Three competing models are available to explain the occurrence of a target event. I assume that this target event follows a binomial probability distribution. If that model is correct, at what point can I distinguish the models and declare a winner? --- class: center <!-- --> Three competing models are available to explain the occurrence of a target event. I assume that this target event follows a binomial probability distribution. If that model is correct, at what point can I distinguish the models and declare a winner? --- class: center ## Example: Same model? My theory claims that the same underlying process (an assumption, here the binomial) governs the outcomes in three populations. At what point can I make a confident claim about that assertion? --- class: center <!-- --> My theory claims that the same underlying process (an assumption, here the binomial) governs the outcomes in three populations. At what point can I make a confident claim about that assertion? ??? Are the data in these populations generated by the same underlying process (model)? --- class: center <!-- --> My theory claims that the same underlying process (an assumption, here the binomial) governs the outcomes in three populations. At what point can I make a confident claim about that assertion? ??? Are the data in these populations generated by the same underlying process (model)? --- class: center <!-- --> My theory claims that the same underlying process (an assumption, here the binomial) governs the outcomes in three populations. At what point can I make a confident claim about that assertion? ??? Are the data in these populations generated by the same underlying process (model)? --- ### Distributions and their distinctions. **Population distribution:** the usually hypothetical set of all possible measurements. <p> </p> - In the frequentist view this would be an infinitely long series of events. <p> </p> - In practice, we have to settle for “really long.” <p> </p> <p> </p> **Sample distribution:** the set of measurements in hand, assumed to be a random sample from the population. <p> </p> - I will use statistics about the sample to infer what is true about population parameters. --- ###Distributions and their distinctions. **Sampling distribution:** Any sample will be off the mark in the value of a statistic relative to the population. These statistic values will have a distribution across different random samples of the same size from the same population. The variability in that distribution will tell us about the precision of the sample as a population estimate and the confidence we can have in claims we make about parameters. <p> </p> - Long run version (repeated sampling interpretation) <p> </p> - Theoretical version *More on both of these when we talk about sampling.* --- ## Bayesian statistics In contrast to the frequentist view, the Bayesian view takes prior beliefs into account in determining the probability of an event. - It is a model of rational thinking that adjusts current beliefs about the probability of an event given previous knowledge or beliefs. - Because those prior beliefs could come from anywhere, the approach is sometimes labeled "subjectivist," but it need not be hopelessly subjective. The key contribution can be summarized in Bayes’ Theorem: `$$\Large \text{A given B} = P(A|B) = \frac{P(B|A)P(A)}{P(B|A)P(A) + P(B|\neg A)P(\neg A)}$$` --- ### Bayesian example I have the personal theory that, generally speaking, Bayesians are smug. I meet a smug person. What is the probability that I have met a Bayesian? `$$\large \text{Bayesian | Smug} = P(B|S) = \frac{P(S|B)P(B)}{P(S|B)P(B) + P(S|\neg B)P(\neg B)}$$` `\(P(B) = .1 \text{(Bayesian are not common, overall)}\)` `\(P(S|B) = .8\)` `\(P(S|\neg B) = .3\)` `$$\large \text{Bayesian | Smug} = P(B|S) = \frac{(.8)(.1)}{(.8)(.1) + (.3)(.9)} = 0.229$$` --- `\(P(B) = .1\)` `\(P(S|B) = .8\)` `\(P(S|\neg B) = .3\)` `\(P(B|S) = 0.229\)` From a pure baserate standpoint I should assume the probability is .1 that this person is a Bayesian. Bayesian reasoning tells me to take my prior beliefs into account given what I believe to be true about the relation of smugness to Bayesian status. <p> </p> -- But, it also tells me not to be too enthusiastic about this Bayesian attribution. I should not just flip around my conditional probability of "smugness given Bayesian" and claim the probability is .8 that I’ve just encountered a Bayesian. -- <p> </p> In the language of Bayes Theory, P(B) is called the **prior probability**, P(B|S) is the **posterior probability**, which is an adjustment of P(B) in the face of additional information (smugness). --- The liberal sprinkling of the term, belief, in all this is what garners the label, subjectivist. <p> </p> There is no need for P(B) or P(S|B) to be point estimates. We can express uncertainty about these beliefs by making them *probability distributions*. The result is a posterior probability distribution. I can use actual data to help me decide which of several different models about Bayesian-smugness beliefs is the better account. <p> </p> There is no controversy when the prior information is based on solid scientific evidence. --- ## Another Bayesian example My doctor calls me with the results of a diagnostic test for a very rare but always fatal medical condition (not COVID). I ask about the nature of the test and he tells me it has a sensitivity of .99 and specificity of .99, based on a large number of clinical studies. How worried should I be? What is the probability that I will actually get this disease? -- Assume that in the population, 1 out of every 10,000 people gets the condition: P(Disease) = .0001. The sensitivity tells me that P(Positive Test|Disease) is .99. The specificity tells me that P(Positive Test|No Disease) is .01. What is the probability that I will get this disease given that I have a positive test? P(Disease|Positive Test) = .0098 ??? Before transitioning, need to know probability of rare disease. `$$\frac{(.99)(.0001)}{(.99)(.0001)+(.01)(.9999)}$$` --- ## `\(p\)`-values Soon enough, we'll get to how `\(p\)`-values are derived from data. But it's worth bringing them up now, because they are statments about probability. What are `\(p\)`-values representing the probability of? -- Which view of probability, frequentist or Bayesian, are `\(p\)`-values associated with? --- ## Likelihood under different views .pull-left[ ### Frequentist `$$p = P(\text{Data}|H_0)$$` ] .pull-right[ ### Bayesian `$$\text{Bayes Factor} = \frac{P(\text{Data}|H_A)}{P(\text{Data}|H_0)}$$` ] Which is right? * `\(p\)`-values and Bayes Factor are highly correlated ([Wetzels et al., 2011](../readings/Wetzels_etal_2011.pdf)). ??? Note that `\(H_A\)` can be a range of possible parameters, in which case, you get the weighted probability across many, or the integral if a continuous range. --- <img src="images/BF_pvalue.png" width="75%" /> .small[([Wetzels et al., 2011](../readings/Wetzels_etal_2011.pdf))] ??? But major disagreement in conclusion. - When `\(p\)`-value falls between .01 and .05, there is a 70% chance that Bayes Factor suggests only anecdotal evidence in favor of alternative. Take-away -- Bayes Factor is more conservative when it comes to finding evidence against the null hypothesis. Is that better, or worse? --- class: inverse ## Next time... binomial distribution **Remember to complete Journal 3 on Canvas.**