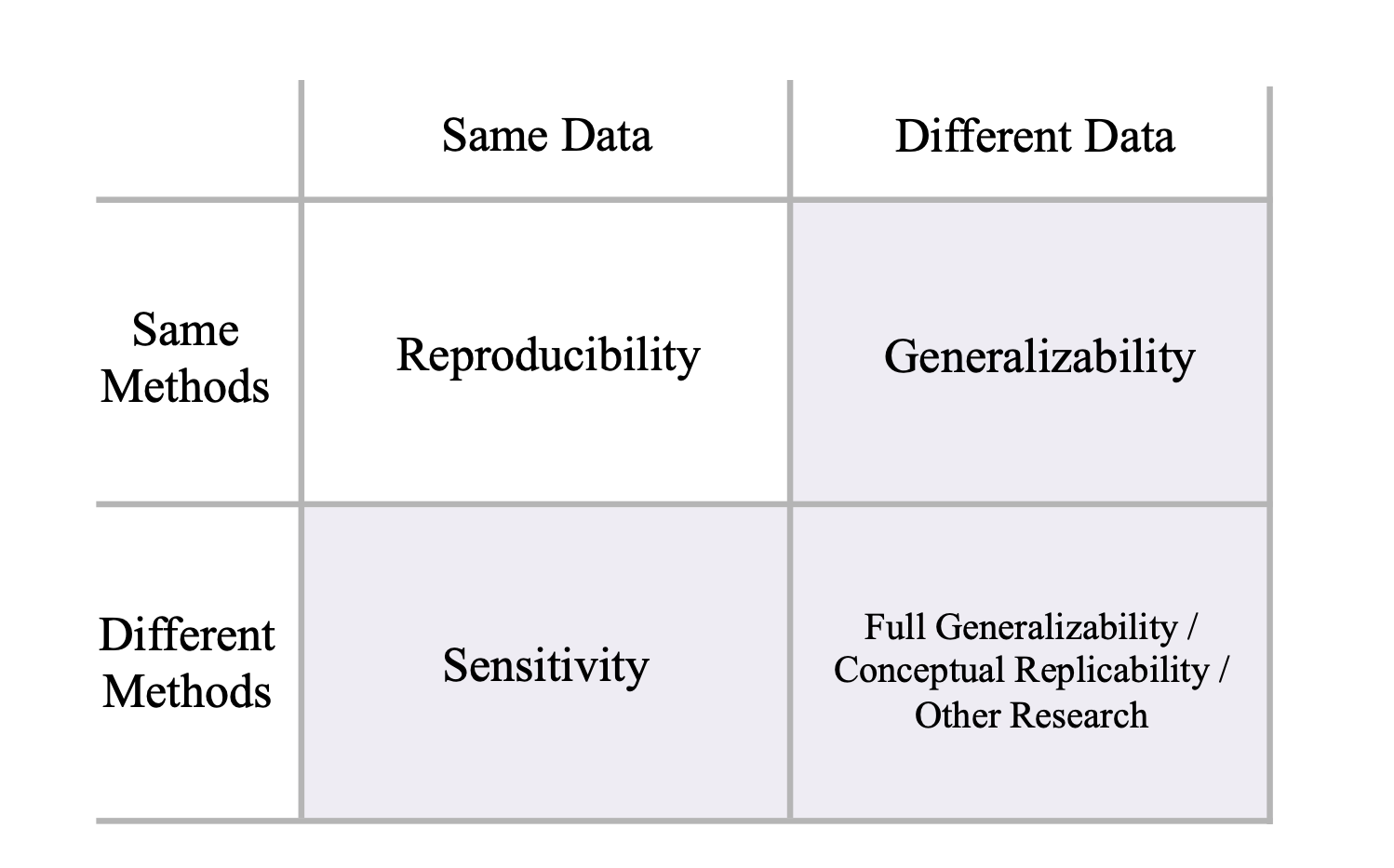

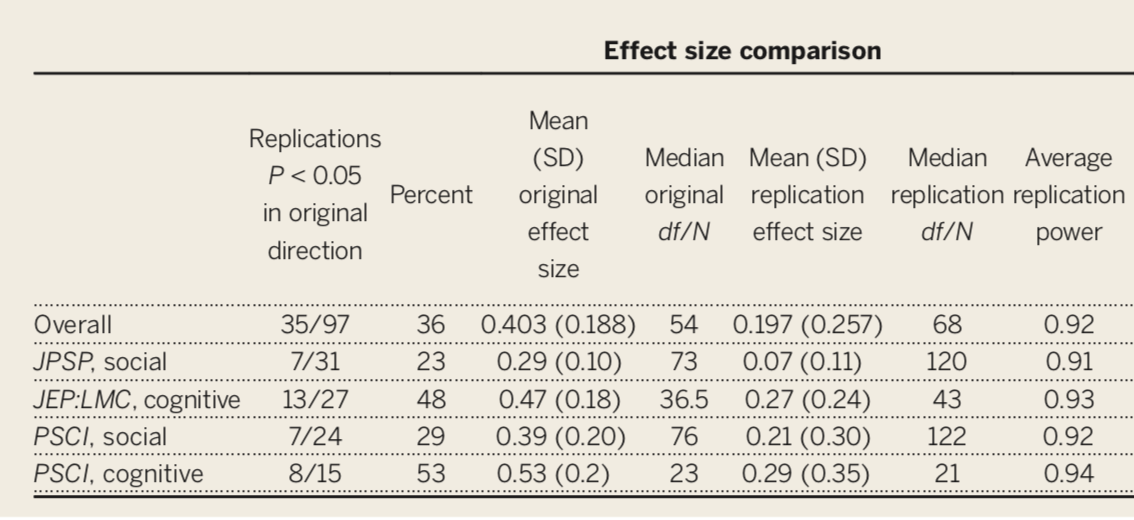

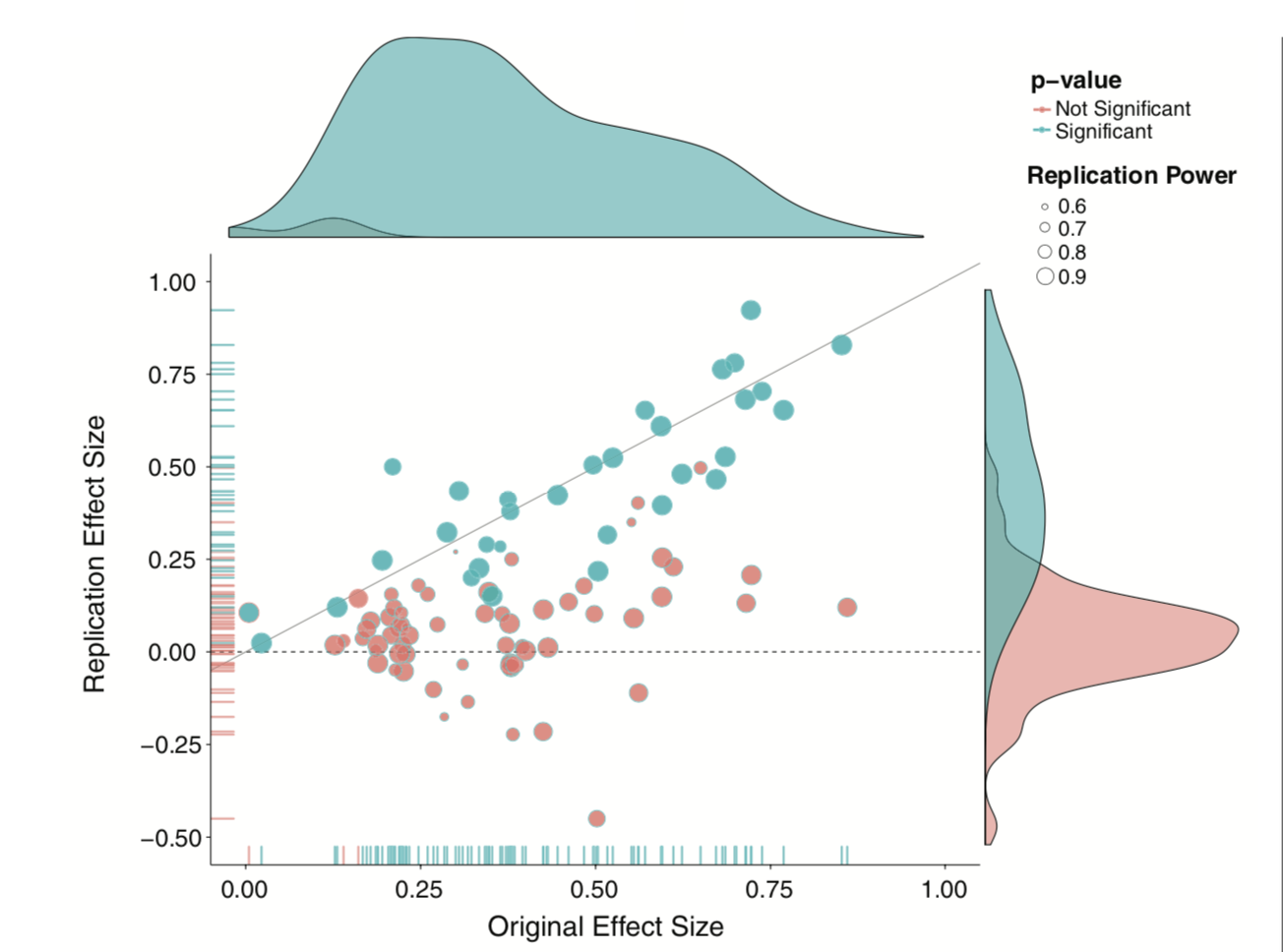

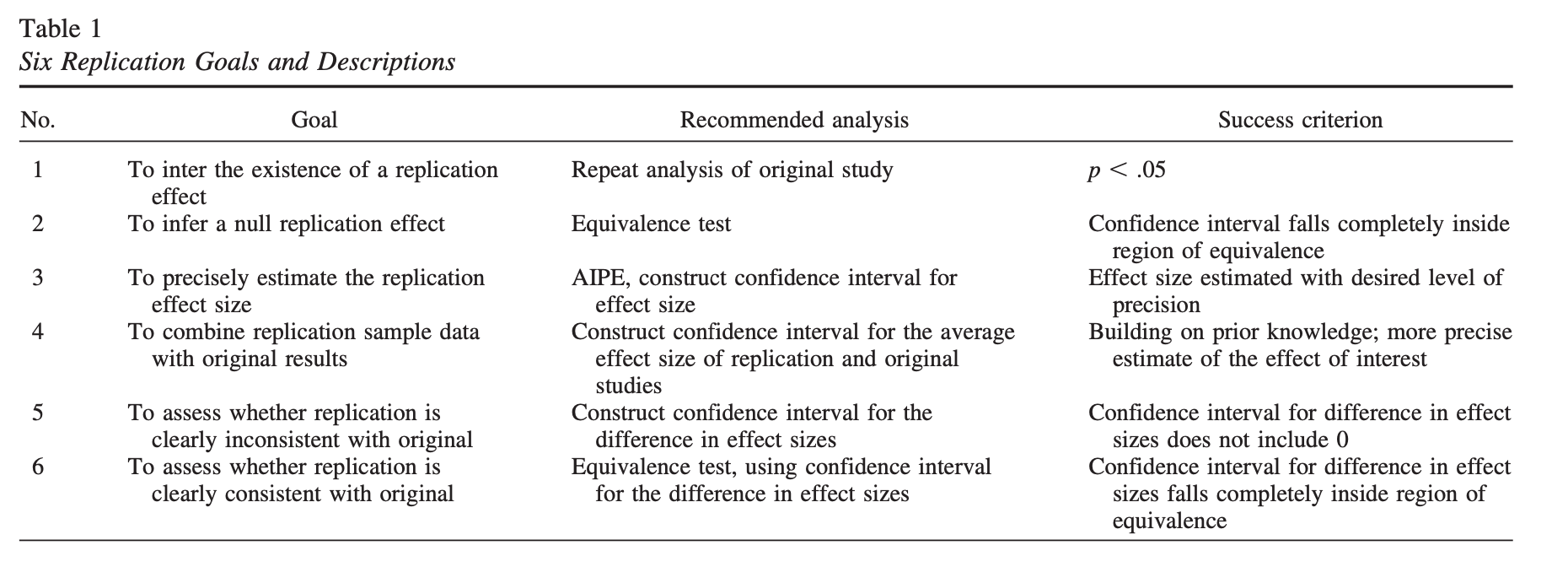

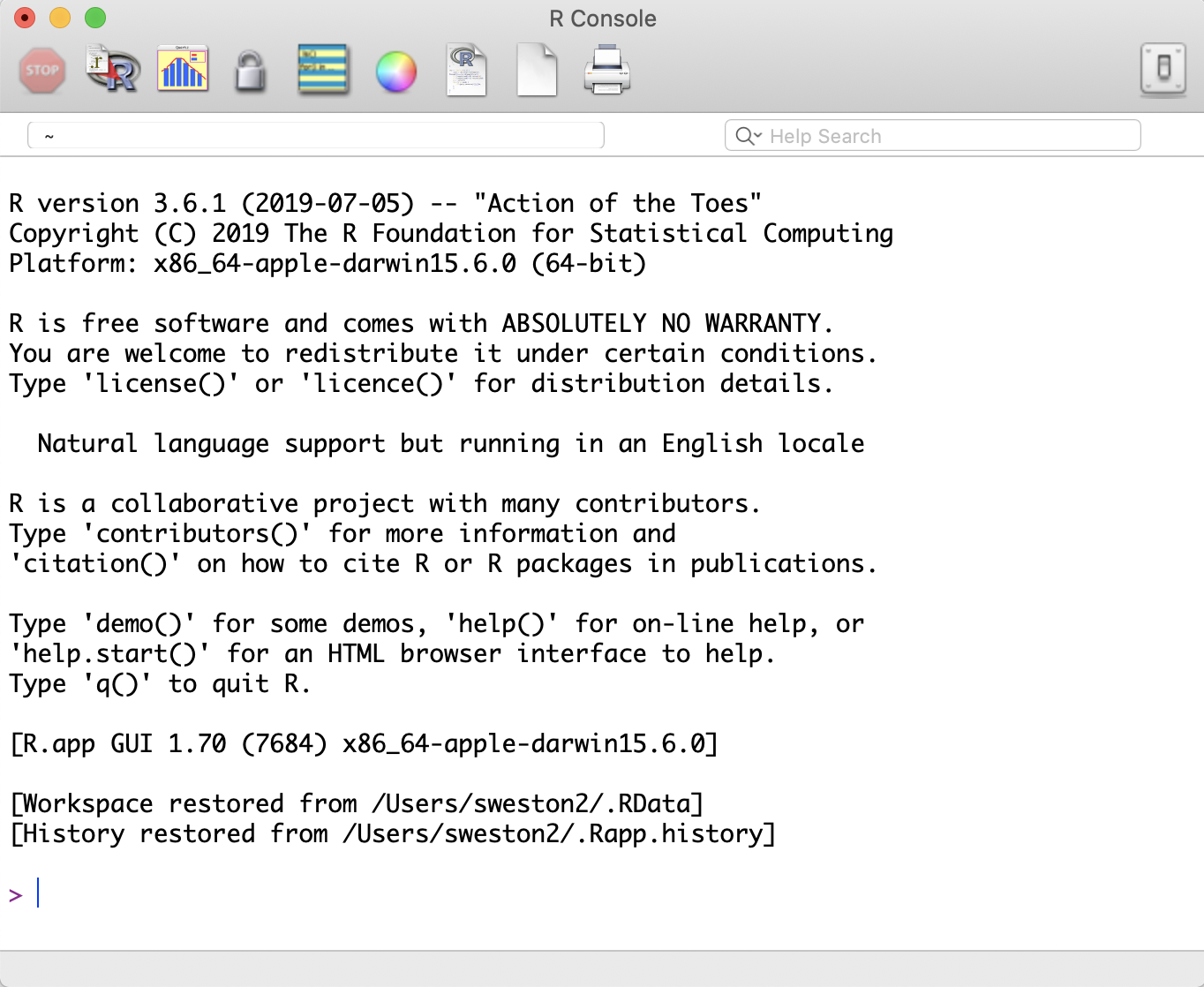

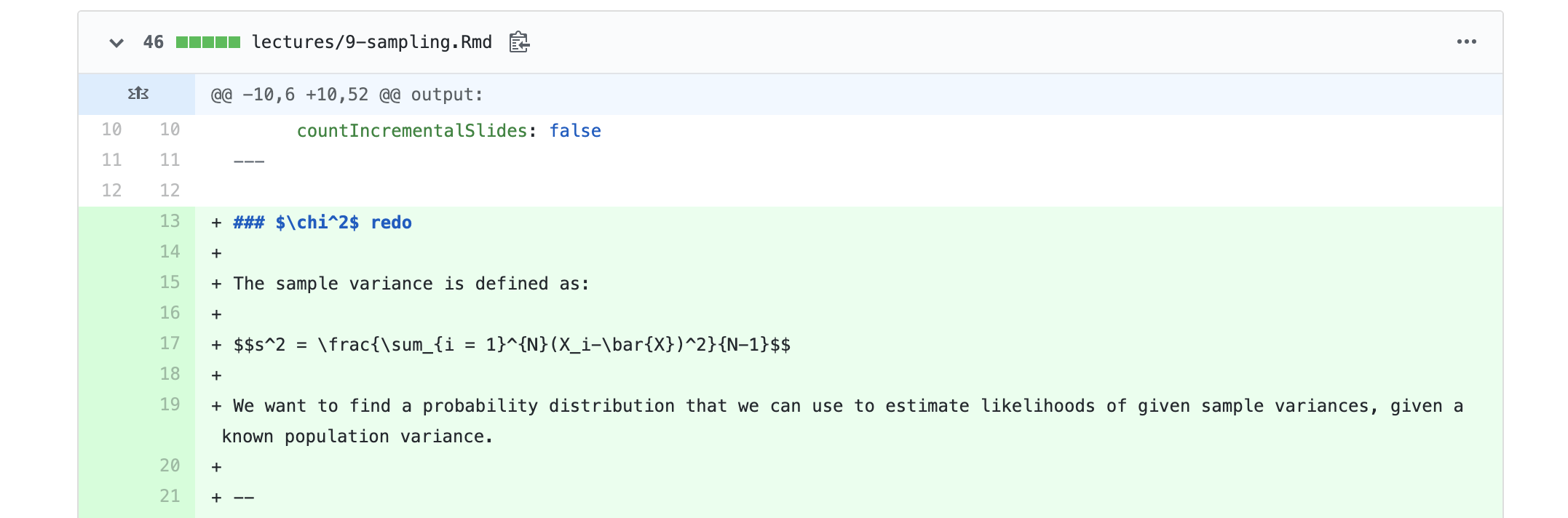

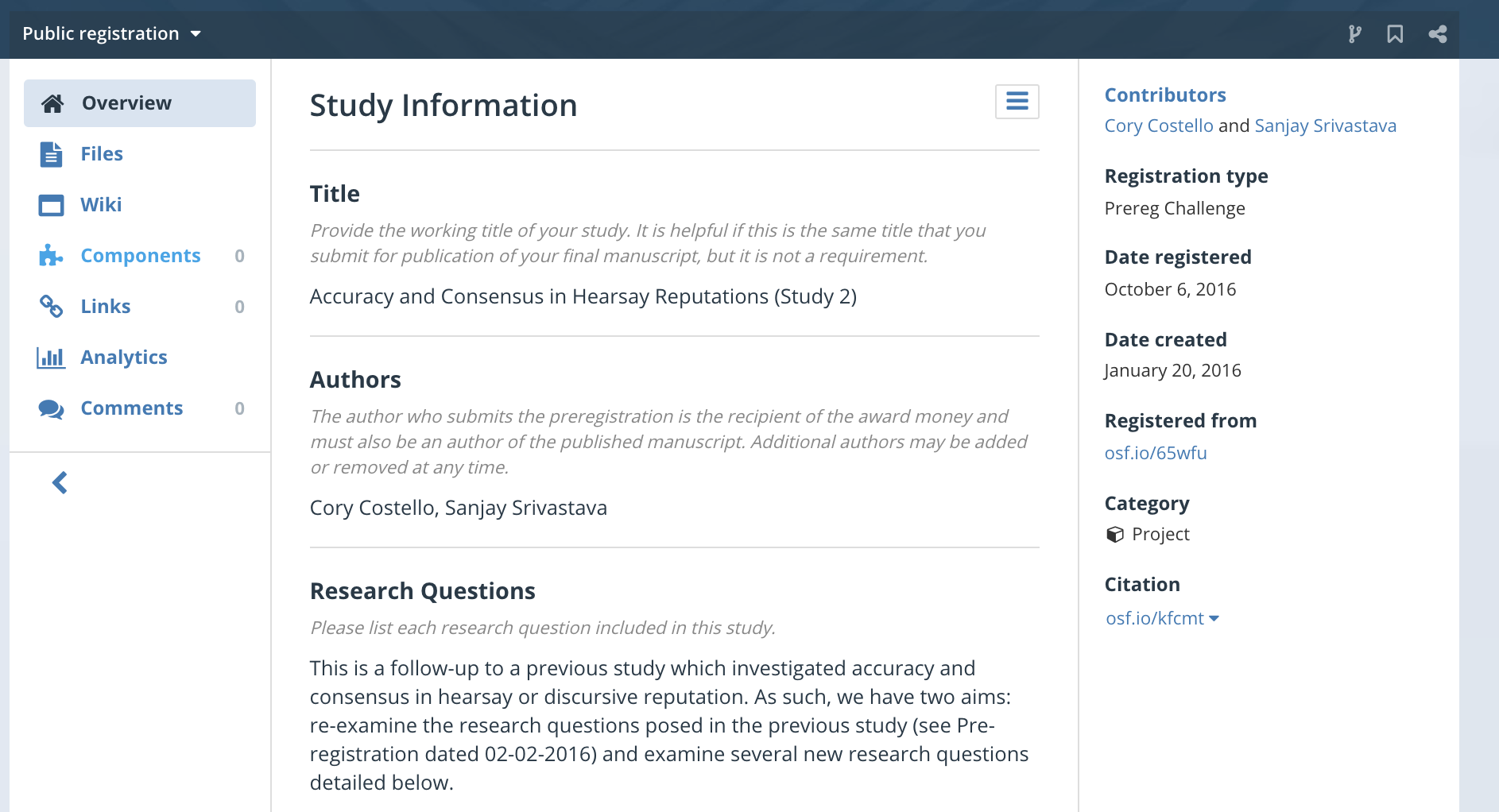

class: center, middle, inverse, title-slide

# Open Science

---

## Last time...

Critiques of NHST

- Misinterpretation of p-values
    - `\(p\)` is `\(p(D|H_{0})\)`, not `\(p(H_0|D)\)`
- Promotes binary thinking
- Easily gamed in the pursuit of incentives
    - Recall Goodhart's Law

---

# _p_-curve

_p_-curve made it clear that if our null hypotheses were indeed false, we would most often see very small _p_-values.

Importantly, this also signalled that literatures (or papers) that contained lots of p-values between .01 and .05 were relatively unlikely, unless there were some fishing expeditions going on.

---

The publication of "False Positive Psychology" and the p-curve paper, following the claim by [Ioannidis (2005)](../readings/Ioannidis_2005.pdf) that as many as half of published findings are false, prompted researchers to take a second look at the "knowns" in our literatures.

If we can demonstrate these "known" effects, then we're ok. Our effects are most likely true.

--

And if that had happened, we probably wouldn't have two lectures in this class dedicated to problems with NHST and how to address them.

---

## A quick digression...

.small[[Condon, Graham, Mroczek, 2018](https://psyarxiv.com/2fn5x/)]

---

The inability to replicate published research has been viewed as especially troubling.

- This has been a long-standing concern, but the poster child is undoubtedly ["Estimating the reproducibility of psychological science"](../readings/OSC_2015.pdf) by the Open Science Collaboration (Science, 2015, 349, 943).

---

Only 36% of the studies were replicated, despite high power and claimed fidelity of the methods.

---

### Why is it so hard to replicate?
- Poor understanding of context necessary to produce most effects
    - We do not recognize the boundary conditions of effects, especially when the limiting conditions are kept constant
- Incomplete communication of the necessary conditions
    - Akin to reading just the first few ingredients for a recipe and then trying to duplicate the dish.

---

### Why is it so hard to replicate?

Sparse communication fosters belief by others that effects are simpler and easier to produce than they really are.

The reality is that key elements have been left out:

- specific methodological or analytic details
- and the tests run before and after the ones that were published.

---

Regarding replications, there's an additional methodological challenge to grapple with.

--

**Example:**

- The original study `\((N = 25)\)` found a significant effect, `\(\bar{X} = 20\)` (95% CI [5, 35]).
- The second study `\((N = 40)\)` did not find a significant effect, `\(\bar{X} = 10\)` (95% CI [-1, 21]).
- Did the second study replicate the first?

---

There is disagreement about what counts as "replicating the effect."

- Both studies significant?
- Significant difference in effect size?

.small[Anderson & Maxwell [(2016)](../readings/anderson_2016.pdf)]

---

Ultimately, many of the problems, both pre-2011 and since, have stemmed from an over-reliance on _p_-values ([Anderson, 2019](../readings/anderson_2019.pdf)).

This is a point that bears repeating, early and often:

**Science cannot use a single metric to evaluate the existence or importance of effects and theories.**

- Detectives don't make a case on a single clue.
- Doctors don't diagnose illness using a single symptom.
- You shouldn't rely on a single number either.
- Plus, we need to stop relying on single studies.

---

## What kind of challenges will need to be addressed?

- Institutionalization of CI and ES but not `\(p\)`
- Journals must change their values
- How do we shift media values? Replication is not “sexy.”
- How do we shift academic values?
What will be the impact on tenure? How do we re-calibrate “productivity?” Citation indices?

---

The inability to replicate much work has been viewed as a singular failure of psychology as a science.

- But is that the best way to view it?

As data, the replication crisis is potentially quite informative and suggests some important features of psychology as a science.

At a minimum, it tells us we don’t understand a phenomenon as well as we think we do.

That is hardly a failure of science, unless we don’t take the next steps to resolve our ignorance.

---

Failures to replicate are surprising, even troubling. We think of them as unfortunate occurrences. Bad luck even.

But should they be viewed that way?

Surprises in science have a long history of playing a key role in scientific progress.

---

A failure to replicate should be viewed as particularly interesting.

> It is time to insist that science does not progress by carefully designed steps called "experiments" each of which has a well-defined beginning and end. Science is a continuous and often disorderly and accidental process. A first principle not formally recognized by scientific methodologists: when you run onto something interesting, drop everything else and study it.
>
> --B. F. Skinner (1968) *A case history in scientific method*

---

## Today...

The open science movement (re:statistics)

- Advancements in programming help researchers identify statistical problems
- A push for new metrics in psychology research
- Attempts to quantify the state of the field
- Development of new research practices to reinvest in old statistics
- Development of analytic techniques

First, some history

---

## Open Science Movement

Sometimes labelled **"the reform movement"** -- (psychological) scientists trying to address problems in the field identified as part of the replication crisis.

(Note, these are not distinct periods of history, nor is either of them considered over.)
Within psychology, much of the force behind this movement has come from social and personality psychologists.

- **Open** because one of the primary problems of the replication crisis was the lack of transparency. Stapel, Bem, everyone did work in private; kept data secret; buried, hid, lost key aspects of research. Opaque was normal.

---

### Dates

2011-2012 -- Bem ESP paper, False Positive Psychology paper, Diederik Stapel fraud case, Hauser fraud case, Sanna fraud case, Smeesters fraud case.

- an interesting time to start graduate school...

2013 -- Center for Open Science is founded

2016 -- Inaugural meeting of the Society for the Improvement of Psychological Science. 100 attendees.

- Attendees include four UO Psych members: Grace Binion, Rita Ludwig, David Condon, and Sanjay Srivastava

---

## 1. We identify problems

New methods of conducting science on science -- often developed to find fraud, but super useful for detecting errors

* [Granularity-related inconsistency of means (GRIM)](http://www.prepubmed.org/grim_test/) test -- is the mean mathematically possible given the sample size?
* [Sample Parameter Reconstruction via Iterative TEchniques (SPRITE)](https://hackernoon.com/introducing-sprite-and-the-case-of-the-carthorse-child-58683c2bfeb) -- given a set of parameters and constraints, [generate](https://steamtraen.shinyapps.io/rsprite/) lots of possible samples and check whether they make sense.
* [StatCheck](http://statcheck.io/) -- upload PDFs and Word documents to look for inconsistencies (e.g., between the reported statistic, df, and _p_-value)

---

## 1. We identify problems

Conceptually, psychologists have struggled with the [interpretation of p-values](https://fivethirtyeight.com/features/not-even-scientists-can-easily-explain-p-values/).

Even worse, we seemed to forget some of the most basic assumptions of our statistical tests.

- What must be true about our trials to use a binomial distribution?

.small[Remember p-curve?]

---

## 2. A push for "new" metrics in psychology research (2014)

Disillusionment with _p_-values led many to propose radical changes. But the field is ruled by researchers who made their name using NHST (and very likely, many of them also used these fishing methods to build their careers). So a softer approach was more widely favored.

[Cumming (2014)](../readings/Cumming_2014.pdf) proposes the "new statistics," which emphasize effect sizes, confidence intervals, and meta-analyses.

---

Except...

Confidence intervals are based on the same underlying probability distributions as our p-values.

And what's the problem with meta-analyses?

---

## 3. Attempts to quantify the state of the field

[The Reproducibility Project (2015)](https://www.theatlantic.com/science/archive/2015/08/psychology-studies-reliability-reproducability-nosek/402466/) finds that most findings in psychological science don't replicate.

Also, [new methods](../readings/Meta-analyses-are-f.pdf) for approaching meta-analyses are studied and popularized.

- There is a growing realization that meta-analyses cannot fix the problem -- publication bias (and p-hacking) have polluted the waters.

---

# Some more drastic changes

## 4. New(ish) research practices

- New(ish) tools for improving reproducibility
- New(ish) tools for reducing the file drawer problem
- New(ish) tools for limiting _p_-hacking
- New(ish) tools for limiting publication bias

---

### Developing norms (not new)

- A _major_ challenge to both reproducibility and robust science is how opaque other people's data and analyses are.
- Fix by having standards and developing methods for communicating details.
- Brain Imaging Data Structure (new!)
**([BIDS](https://bids.neuroimaging.io/))**
- [codebook](https://cran.r-project.org/web/packages/codebook/index.html) R package

---

### R (not new)

.pull-left[
* Use of scripts -- data analysis is **reproducible**
    - Don't be your own worst collaborator
* Software is open-source
    - **Equity** in terms of who can use the software
    - **Equity** in terms of who can build the software!
    - Newest statistical methods are available right away
]

.pull-right[ ]

---

### RMarkdown (Not that new)

Combination of two languages: **R** and Markdown. Markdown is a way of writing without a WYSIWYG editor -- instead, little bits of code tell the text editor how to format the document.

Increased flexibility: Markdown can be used to create

- presentations (this one!)
- manuscripts
- CVs
- books
- websites

By combining Markdown with R...

---

### Git (not new)

**Git** is a version control system. Think Microsoft Track Changes for your code.

* Additionally allows multiple collaborators to contribute code to the same project.

---

### GitHub (also not new)

* GitHub is one site that facilitates the use of Git. (Others exist. But they don't have OctoCat.)
* Repositories can be private or public -- allow you to share your work with others (reproducible)
* GitHub also plays well with the Markdown language, which is what you're using for your homework assignments.
    - You can [link GitHub repositories to R Projects](https://happygitwithr.com) for near seamless integration.
    - Pair GitHub and R to make websites! (Interested? Have 4 hours to kill? I recommend looking through [Alison Hill's workshop on blogdown](https://arm.rbind.io/days/day1/blogdown/) and the [tutorial prepared by Dani Cosme and Sam Chavez](https://robchavez.github.io/datascience_gallery/html_only/websites.html)).
---

### Open Science Framework (OSF.io)

* Another repository, also includes version control
* Reproducibility
* Doesn't use code/terminal to update files
* Drag and drop, or linked with other repositories (Dropbox, Box, Google Drive, etc.)
* Also great for collaborations
* Easy to navigate
* Can be paired with applications you (should) already use

---

### Reducing file drawer: PsyArXiv

.pull-left[ ]

* preprint = the pre-copyedited version of your manuscript
* journals have different policies regarding what you can post. It's always a good idea to [check](http://sherpa.ac.uk/romeo/index.php).

---

### Preregistration

OSF also allows you to preregister a project. **[Preregistration](https://www.theguardian.com/science/blog/2013/jun/05/trust-in-science-study-pre-registration)** is creating a time-stamped, publicly available, frozen document of your research plan prior to executing that plan.

---

### Limit p-hacking: Preregistration

#### Goal: Make it harder to _p_-hack and HARK

* Did the researcher preregister 3 outcome variables and only report one?
* Did the researcher *actually* believe this correlation would be significant?

--

#### Goal: distinguish data-driven choices from theory-based choices

* Was the covariate included because of theory or based on descriptive statistics?

--

#### Goal: correctly identify confirmatory and exploratory research

* Exploratory research should be OK!
* Protection against editors and reviewers

---

### Limit p-hacking and publication bias: Registered reports

**Registered reports (RR)** are a special kind of journal article

<img src="images/RR.png" width="80%" />

.small[image credit: [Dorothy Bishop](https://blog.wellcomeopenresearch.org/2018/12/04/rewarding-best-practice-with-registered-reports/)]

---

## 5. Development of new(ish) analytic techniques

More psychologists have embraced **machine learning methods**

- These largely move us away from traditional p-values; decision making becomes predicated on the ability of theoretical models to predict new sets of data.
    - How accurate does your model need to be for you to accept a relationship?
- Most machine learning techniques adjust for over-fitting, or essentially capitalizing on the random error in your sample

---

## 5. Development of new(ish) analytic techniques

**Sensitivity analyses** and [**multiverse analyses**](https://www.psychologicalscience.org/observer/run-all-the-models-dealing-with-data-analytic-flexibility) test the robustness of a finding. These often include testing every possible set of covariates or subgroups, to see whether a particular finding is just because of a unique combination of variables and people.

---

## Criticisms

Certainly there are some who argue that open science methods are broadly harmful: that they stifle creativity, slow down research, incentivize *ad hominem* attacks and "methodological terrorists", and encourage data parasites.

It is worth admitting that, unless incentive structures change, there can be harm done with adoption of these methods. But part of the goal is to change the overall system.

--

A more legitimate criticism is that adoption of these methods can fool researchers into believing that all research using these methods is "good" and all research not using them is "bad."

A timely example: ["Preregistration is redundant, at best." (Oct 31, 2019). Szollosi et al.](https://psyarxiv.com/x36pz/)

--

And it's worth adding that this kind of work can take longer.

---

class: inverse

## Next time...

One-sample tests
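---

As a rough illustration of the GRIM test mentioned under "We identify problems," the sketch below checks whether a reported mean is arithmetically possible for a given sample size. It is written in Python rather than R, and it is not the code behind the linked GRIM tool. The function name `grim_consistent` and the example numbers are hypothetical, and it assumes each participant contributes a single integer-valued score.

```python
import math


def grim_consistent(reported_mean: float, n: int, decimals: int = 2) -> bool:
    """Return True if some integer total could produce the reported mean.

    Assumes n integer-valued scores (e.g., one Likert item per person),
    so the sum of scores must itself be an integer.
    """
    if n <= 0:
        raise ValueError("n must be a positive integer")
    # A mean reported to `decimals` places can be off by at most half a unit
    # in the last place; the tiny epsilon absorbs floating-point error.
    tolerance = 0.5 * 10 ** (-decimals) + 1e-9
    approx_total = reported_mean * n
    # Only the integer totals on either side of mean * n can be close enough.
    for total in (math.floor(approx_total), math.ceil(approx_total)):
        if abs(total / n - reported_mean) <= tolerance:
            return True
    return False


if __name__ == "__main__":
    # Hypothetical reported values, for illustration only.
    print(grim_consistent(5.19, 28))
    print(grim_consistent(5.18, 28))
```

With 28 integer scores, a reported mean of 5.19 is flagged as impossible (the nearest achievable means are about 5.18 and 5.21), while 5.18 passes. A flag means the numbers deserve a closer look, not that fraud occurred; typos and unreported exclusions produce the same pattern.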